Taolin Zhang

Motto: 路漫漫其修远兮,吾将上下而求索 (The way was long and wrapped in gloom did seem, as I urged on to seek my vanished dream.)

Hi there! (*^▽^*) Since Feb. 2025, I have been a Tenure-Track Lecturer at Hefei University of Technology, working in the Lab for Media Computing (LMC). Previously, I was an AI researcher at AAIG for about 2 years. I obtained my Ph.D. degree from East China Normal University in 07/2023, supervised by Prof. Xiaofeng He and Alibaba Algorithm Expert Chengyu Wang. My research interests are natural language processing and multimodal applications, with a focus on knowledge-enhanced neural models and their applications in information extraction and question answering. I did my bachelor's at the School of Computer Science, Shanxi University, advised by Prof. Hongye Tan.

Email / Google Scholar / Zhihu / DBLP

News

Education

Work Experience

Recent Projects

Building "Yu Feng", a series of large models based on Qwen for content security, to tackle high-difficulty, high-risk challenges in audio, video, image, text, multimodal, and multilingual scenarios.

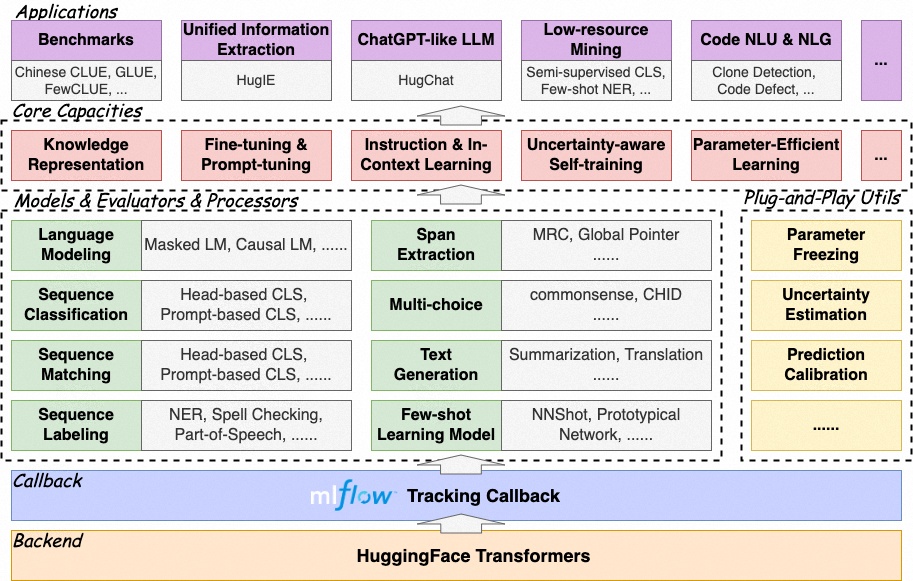

EasyNLP is an easy-to-use NLP development and application toolkit in PyTorch, first released inside Alibaba in 2021. It is built with scalable distributed training strategies and supports a comprehensive suite of NLP algorithms for various NLP applications. EasyNLP integrates knowledge distillation and few-shot learning for deploying large pre-trained models in practice, together with various popular multimodal pre-trained models. It provides a unified framework for model training, inference, and deployment in real-world applications. It has powered more than 10 business units (BUs) and more than 20 business scenarios within the Alibaba Group. It is seamlessly integrated into Platform of AI (PAI) products, including PAI-DSW for development, PAI-DLC for cloud-native training, PAI-EAS for serving, and PAI-Designer for zero-code model training.

In HugNLP, we provide popular transformer-based models as backbones, such as BERT, RoBERTa, and GPT-2. We also release our pre-built KP-PLM, a novel knowledge-enhanced pre-training paradigm that injects factual knowledge and can easily be applied to arbitrary PLMs. Apart from basic PLMs, we also implement task-specific models covering sequence classification, matching, labeling, span extraction, multiple choice, and text generation. Notably, we develop standard fine-tuning (based on a CLS head) and prompt-tuning models that enable PLM tuning on classification tasks. For few-shot learning settings, HugNLP provides prototypical networks for both few-shot text classification and named entity recognition (NER).

Research

I'm interested in developing knowledge-enhanced neural models for natural language processing and multimodal applications (e.g., pre-trained models, information extraction, and question answering).

Conference Papers (*: equal contribution)

- UniEdit: A Unified Knowledge Editing Benchmark for Large Language Models

Qizhou Chen, Dakan Wang, Taolin Zhang, Zaoming Yan, Chengsong You, Chengyu Wang, Xiaofeng He

NeurIPS 2025

- BELLE: A Bi-Level Multi-Agent Reasoning Framework for Multi-Hop Question Answering

Taolin Zhang, Dongyang Li, Qizhou Chen, Chengyu Wang, Xiaofeng He

ACL 2025

- Lifelong Knowledge Editing for Vision Language Models with Low-Rank Mixture-of-Experts

Qizhou Chen, Chengyu Wang, Dakan Wang, Taolin Zhang, Wangyue Li, Xiaofeng He

CVPR 2025

- Attribution Analysis Meets Model Editing: Advancing Knowledge Correction in Vision Language Models with VisEdit

Qizhou Chen, Taolin Zhang, Chengyu Wang, Xiaofeng He, Dakan Wang and Tingting Liu

AAAI 2025 (Oral)

- Lifelong Knowledge Editing for LLMs with Retrieval-Augmented Continuous Prompt Learning

Qizhou Chen*, Taolin Zhang*, Xiaofeng He, Dongyang Li, Chengyu Wang, Longtao Huang and Hui Xue

EMNLP 2024

- R4: Reinforced Retriever-Reorder-Responder for Retrieval-Augmented Large Language Models

Taolin Zhang*, Dongyang Li*, Qizhou Chen, Chengyu Wang, Longtao Huang, Hui Xue, Xiaofeng He and Jun Huang

ECAI 2024

- DAFNet: Dynamic Auxiliary Fusion for Sequential Model Editing in Large Language Models

Taolin Zhang*, Qizhou Chen*, Dongyang Li, Chengyu Wang, Xiaofeng He, Longtao Huang, Hui Xue and Jun Huang

ACL findings 2024

- On the Role of Long-tail Knowledge in Retrieval Augmented Large Language Models

Dongyang Li*, Junbing Yan*, Taolin Zhang*, Chengyu Wang, Xiaofeng He, Longtao Huang, Hui Xue and Jun Huang

ACL 2024

- KEHRL: Learning Knowledge-Enhanced Language Representations with Hierarchical Reinforcement Learning

Dongyang Li*, Taolin Zhang*, Longtao Huang, Chengyu Wang, Xiaofeng He and Hui Xue

COLING 2024

- UniPSDA: Unsupervised Pseudo Semantic Data Augmentation for Zero-Shot Cross-Lingual Natural Language Understanding

Dongyang Li*, Taolin Zhang*, Jiali Deng, Longtao Huang, Chengyu Wang, Xiaofeng He and Hui Xue

COLING 2024

- TRELM: Towards Robust and Efficient Pre-training for Knowledge-Enhanced Language Models

Junbing Yan, Chengyu Wang, Taolin Zhang, Xiaofeng He, Jun Huang, Wei Zhang, Longtao Huang and Hui Xue

COLING 2024

- CIDR: A Cooperative Integrated Dynamic Refining Method for Minimal Feature Removal Problem

Qian Chen, Taolin Zhang, Dongyang Li, Xiaofeng He

AAAI 2024

- Learning Knowledge-Enhanced Contextual Language Representations for Domain Natural Language Understanding

Taolin Zhang, Ruyao Xu, Chengyu Wang, Zhongjie Duan, Cen Chen, Minghui Qiu, Dawei Cheng, Xiaofeng He, Weining Qian

EMNLP 2023

- From Complex to Simple: Unraveling the Cognitive Tree for Reasoning with Small Language Models

Junbing Yan, Chengyu Wang, Taolin Zhang, Xiaofeng He, Jun Huang, Wei Zhang

EMNLP 2023

- OnMKD: An Online Mutual Knowledge Distillation Framework for Passage Retrieval

Jiali Deng, Dongyang Li, Taolin Zhang, Xiaofeng He

NLPCC 2023

- Knowledge-Enhanced Prototypical Network with Structural Semantics for Few-Shot Relation Classification

Yanghu Li, Taolin Zhang, Dongyang Li, Xiaofeng He

PAKDD 2023 (Oral)

- Revisiting and Advancing Chinese Natural Language Understanding with Accelerated Heterogeneous Knowledge Pre-training

Taolin Zhang, Junwei Dong, Jianing Wang, Chengyu Wang, Ang Wang, Yinghui Liu, Jun Huang, Yong Li, Xiaofeng He

EMNLP 2022

- EasyNLP: A Comprehensive and Easy-to-use Toolkit for Natural Language Processing

Chengyu Wang, Minghui Qiu, Taolin Zhang, Tingting Liu, Lei Li, Jianing Wang, Ming Wang, Jun Huang, Wei Lin

EMNLP 2022

- HiCLRE: A Hierarchical Contrastive Learning Framework for Distantly Supervised Relation Extraction

Dongyang Li*, Taolin Zhang*, Nan Hu, Chengyu Wang, Xiaofeng He

ACL findings 2022

- DKPLM: Decomposable Knowledge-enhanced Pre-trained Language Model for Natural Language Understanding

Taolin Zhang, Chengyu Wang, Nan Hu, Minghui Qiu, Chengguang Tang, Xiaofeng He, Jun Huang

AAAI 2022

- HfGCN: Hierarchical Fused GCN for Joint Entity and Relation Extraction

Wei Nong, Taolin Zhang, Shuangji Yang, Nan Hu, Xiaofeng He

ICBK 2021

- HORNET: Enriching Pre-trained Language Representations with Heterogeneous Knowledge Sources

Taolin Zhang, Zerui Cai, Chengyu Wang, Peng Li, Yang Li, Minghui Qiu, Chengguang Tang, Xiaofeng He, Jun Huang

CIKM 2021

- HAIN: Hierarchical Aggregation and Inference Network for Document-Level Relation Extraction

Nan Hu, Taolin Zhang, Shuangji Yang, Wei Nong, Xiaofeng He

NLPCC 2021

- EMBERT: A Pre-trained Language Model for Chinese Medical Text Mining

Zerui Cai, Taolin Zhang, Chengyu Wang, Xiaofeng He

APWEB 2021

- SaGCN: Structure-Aware Graph Convolution Network for Document-Level Relation Extraction

Shuangji Yang, Taolin Zhang, Danning Su, Nan Hu, Wei Nong, Xiaofeng He

PAKDD 2021

- SMedBERT: A Knowledge-Enhanced Pre-trained Language Model with Structured Semantics for Medical Text Mining

Taolin Zhang, Zerui Cai, Chengyu Wang, Minghui Qiu, Bite Yang, Xiaofeng He

ACL 2021

- Knowledge-Empowered Representation Learning for Chinese Medical Reading Comprehension: Task, Model and Resources

Taolin Zhang, Chengyu Wang, Minghui Qiu, Bite Yang, Xiaofeng He, Jun Huang

ACL findings 2021

Journal Papers:

- A Short Survey on Small Reasoning Models: Training, Inference, Applications and Research Directions

Chengyu Wang*, Taolin Zhang*, Richang Hong, Jun Huang

Frontiers of Computer Science 2025 (Selected as Free OA)